
Amirshayan Nasirimajd

I'm a research fellow at Politecnico di Milano in Milan, Italy, where I work on the ARISE and ENFIELD EU projects. I received my master's degree in Data Science and Engineering from the Polytechnic University of Turin, where my thesis was "Sequential Domain Generalisation for Egocentric Action Recognition". During my master's degree, I won the EPIC@CVPR challenge at CVPR 2023. At Politecnico di Milano, I work on applications of computer vision and AI to human-robot interaction in manufacturing.


Research

I'm interested in computer vision, video understanding, multi-modal learning, and robotics. Most of my research is about using vision-language models to improve domain generalization and adaptation for egocentric action recognition:


Sequential Domain Generalisation for Egocentric Action Recognition

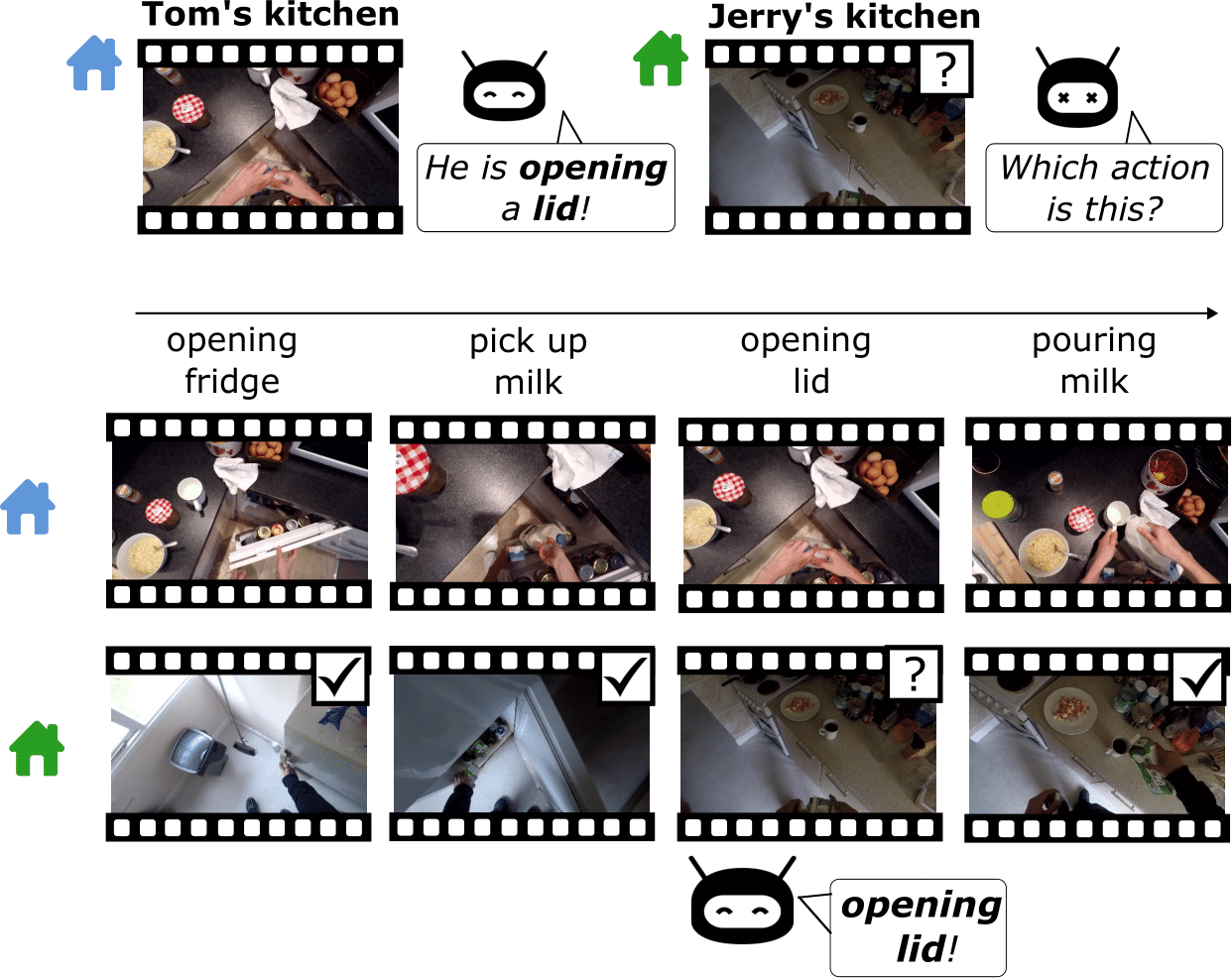

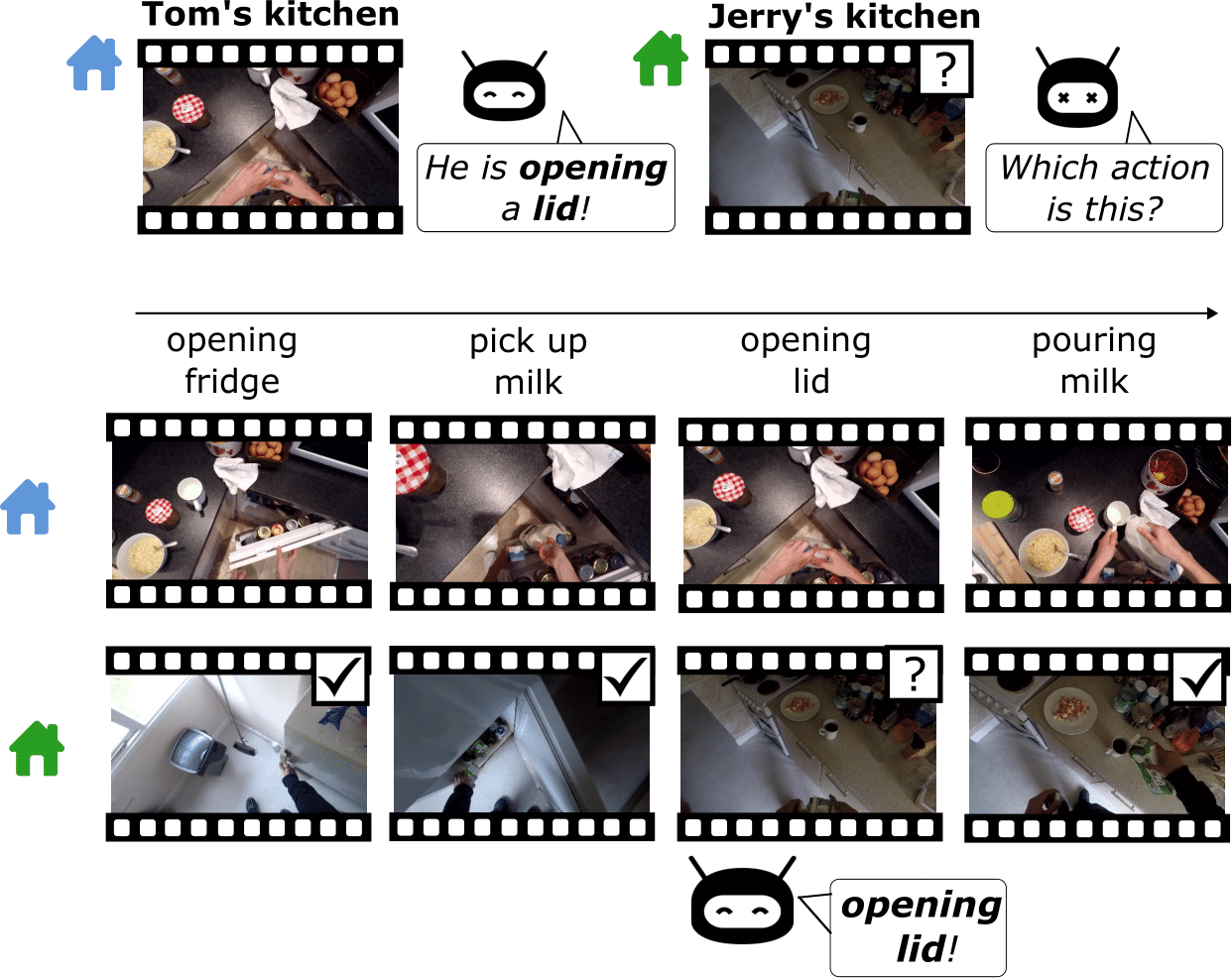

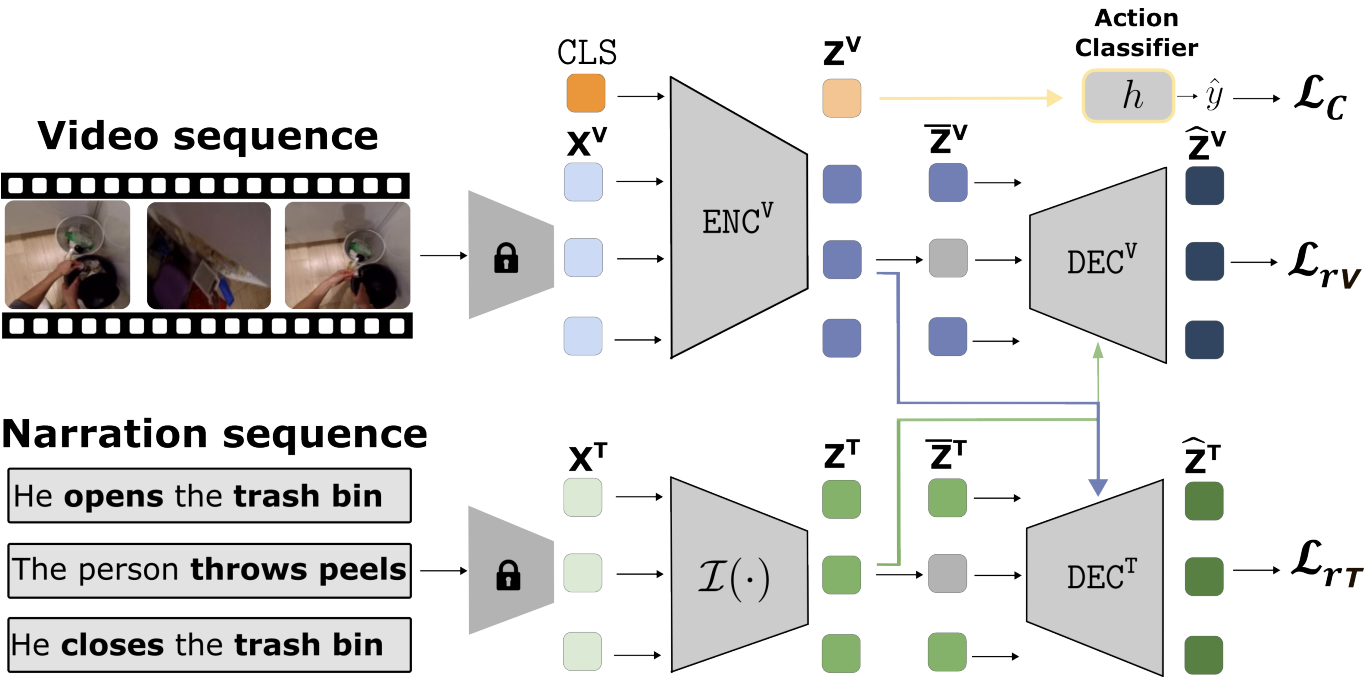

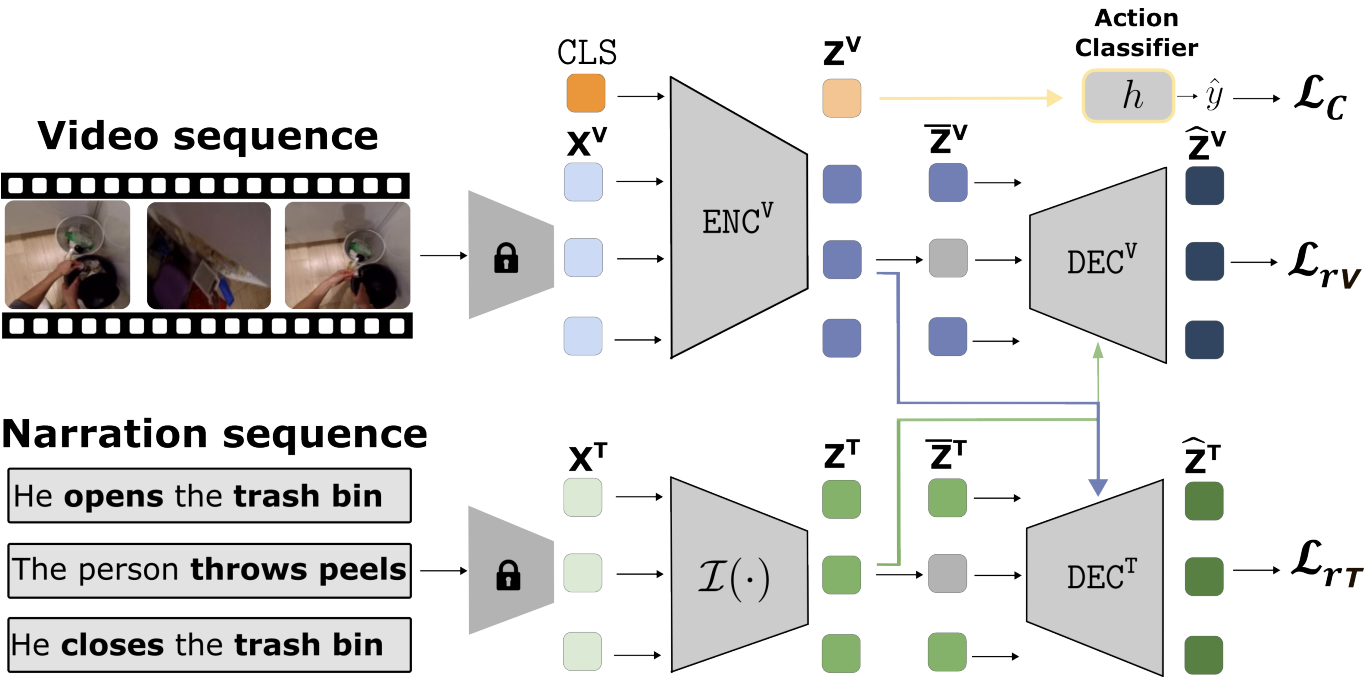

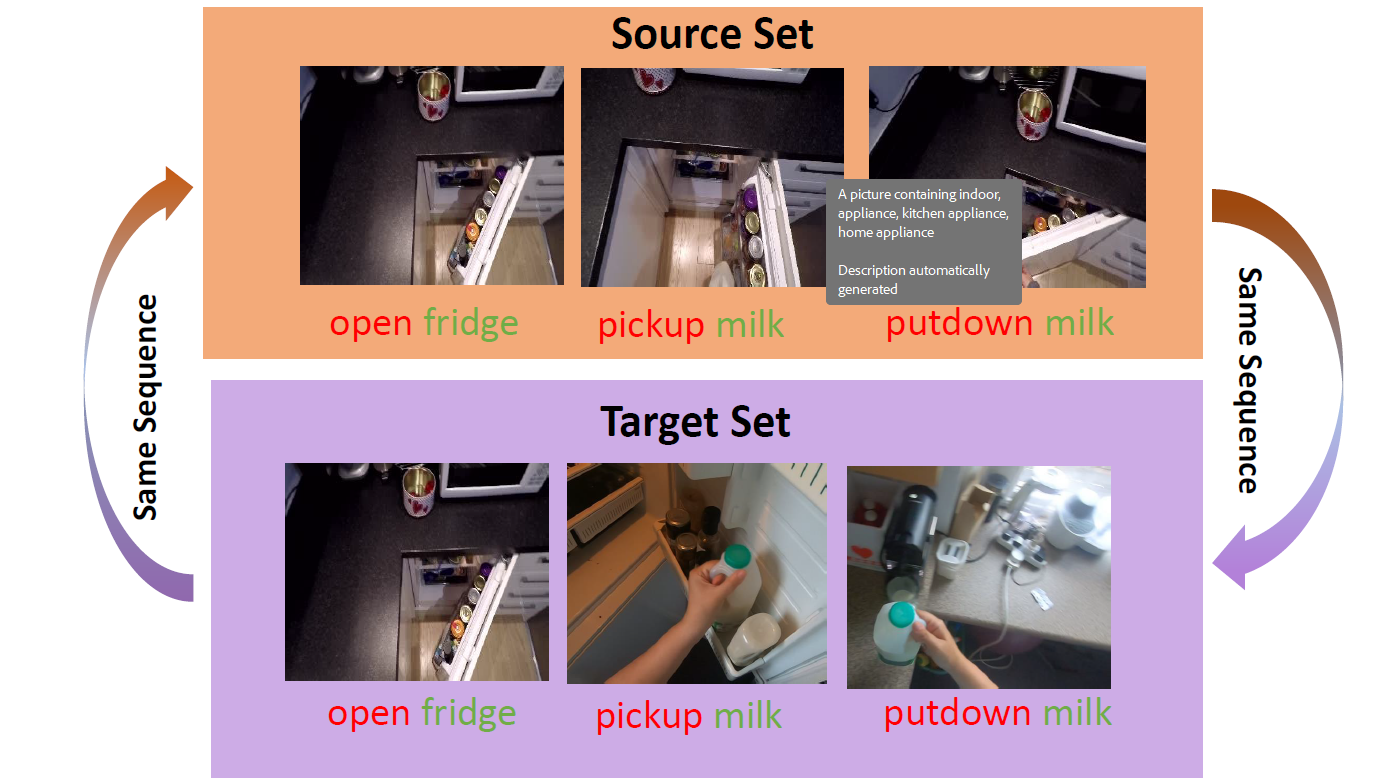

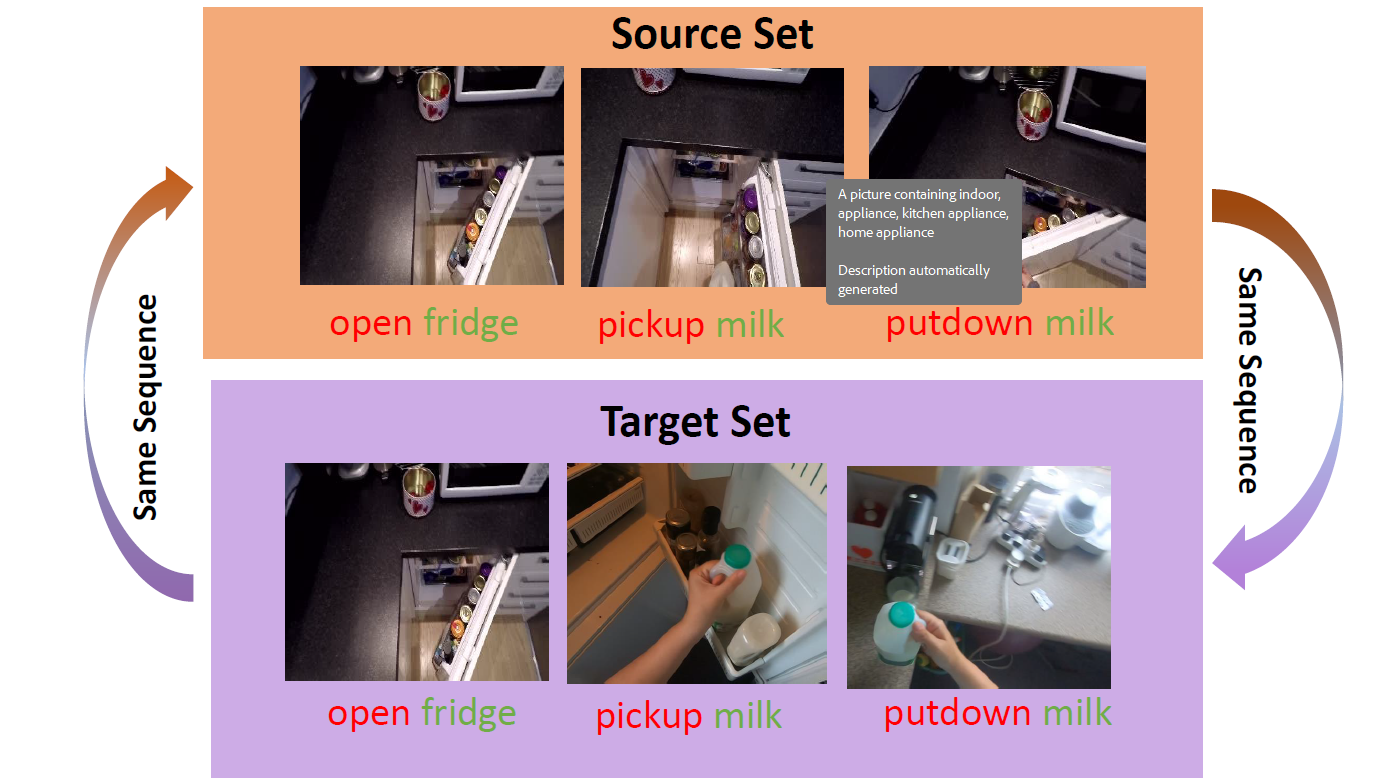

Amirshayan Nasirimajd, Chiara Plizzari, Simone Alberto Peirone, Marco Ciccone, Giuseppe Averta, Barbara Caputo

Accepted at Pattern Recognition Letters, 2025

Paper Link, Project Website

Recognizing human activities from visual inputs, particularly from a first-person viewpoint, is essential for enabling robots to replicate human behavior. Egocentric vision, characterized by cameras worn by observers, captures diverse changes in illumination, viewpoint, and environment. This variability leads to a notable drop in the performance of Egocentric Action Recognition models when they are tested in environments not seen during training. In this paper, we tackle these challenges by proposing a domain generalization approach for Egocentric Action Recognition. Our insight is that action sequences often reflect consistent user intent across visual domains. By leveraging action sequences, we aim to enhance the model’s ability to generalize to unseen environments. Our proposed method, named SeqDG, introduces a visual-text sequence reconstruction objective (SeqRec) that uses contextual cues from both text and visual inputs to reconstruct the central action of the sequence. Additionally, we enhance the model’s robustness by training it on mixed sequences of actions from different domains (SeqMix). We validate SeqDG on the EGTEA and EPIC-KITCHENS-100 datasets. Results on EPIC-KITCHENS-100 show that SeqDG leads to a +2.4% relative average improvement in cross-domain action recognition in unseen environments, and on EGTEA the model achieves +0.6% Top-1 accuracy over the state of the art in intra-domain action recognition.
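As a rough illustration of the SeqRec idea above (reconstructing the central action from its context), here is a minimal sketch of how a training example could be prepared. It assumes the central action is hidden behind a mask token before reconstruction, which the abstract does not state. The function name `mask_central_action`, the `"[MASK]"` token, and the example narrations are all hypothetical and are not taken from the SeqDG code.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mask_central_action(sequence: Sequence[T], mask_token: T) -> Tuple[List[T], T, int]:
    """Hide the central element of an action sequence (hypothetical helper).

    Returns the masked sequence, the hidden element (the reconstruction
    target), and its index. For even lengths the upper-middle element is used.
    """
    if not sequence:
        raise ValueError("sequence must not be empty")
    center = len(sequence) // 2
    masked = list(sequence)          # copy, so the input is left unchanged
    target = masked[center]
    masked[center] = mask_token      # assumption: masking precedes reconstruction
    return masked, target, center


# Hypothetical text narrations for a five-action window.
narrations = ["open fridge", "take milk", "pour milk", "close fridge", "put cup"]
masked, target, center = mask_central_action(narrations, "[MASK]")
```

A model trained this way would receive `masked` as context and be asked to recover `target`. The same helper could be applied to per-clip visual features instead of strings.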


Sequential Domain Generalisation for Egocentric Action Recognition

Amirshayan Nasirimajd, Master's Degree Thesis, 2024

Webthesis.POLITO

In this thesis, we present Sequential Domain Generalisation (SeqDG), a reconstruction-based architecture that improves the generalization of action recognition models. It does so by using a language model and a dual encoder-decoder that refines the feature representation.


EPIC-KITCHENS-100 Unsupervised Domain Adaptation Challenge: Mixed Sequences Prediction

Amirshayan Nasirimajd, Simone Alberto Peirone, Chiara Plizzari, Barbara Caputo

IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop, 2023

CVPR Oral Presentation / arXiv

Winner of the EPIC-KITCHENS-100 Unsupervised Domain Adaptation (UDA) Challenge in Action Recognition. Our approach is based on the idea that the order in which actions are performed is similar between the source and target domains. Based on this, we generate a modified sequence by randomly combining actions from the source and target domains. As only unlabelled target data are available in the UDA setting, we use a standard pseudo-labeling strategy to extract action labels for the target. We then ask the network to predict the resulting action sequence. This allows the network to integrate information from both domains during training and to achieve better transfer results on the target domain. Additionally, to better incorporate sequence information, we use a language model to filter out unlikely sequences.
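The mixing and pseudo-labeling steps described above can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the challenge submission's code: the description does not say how actions are combined, so the position-wise swap below is an assumption, as are the confidence threshold and the names `Clip`, `pseudo_label`, and `mix_sequences`. The language-model filtering step is not covered.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Clip:
    """One action clip; `label` is ground truth (source) or a pseudo-label (target)."""
    domain: str  # "source" or "target"
    label: int


def pseudo_label(probs: Sequence[float], threshold: float = 0.5) -> Optional[int]:
    """Return the most likely class if its probability reaches `threshold`, else None."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return best if probs[best] >= threshold else None


def mix_sequences(source: List[Clip], target: List[Clip],
                  p_target: float = 0.5,
                  rng: Optional[random.Random] = None) -> List[Clip]:
    """Build a mixed sequence: at each position keep the source clip or,
    with probability `p_target`, swap in the target clip (assumed scheme)."""
    if len(source) != len(target):
        raise ValueError("source and target sequences must have equal length")
    rng = rng or random.Random()
    return [t if rng.random() < p_target else s for s, t in zip(source, target)]


# Hypothetical toy data: target labels would come from `pseudo_label` in practice.
src = [Clip("source", label) for label in [0, 1, 2, 3, 4]]
tgt = [Clip("target", label) for label in [5, 6, 7, 8, 9]]
mixed = mix_sequences(src, tgt, p_target=0.5, rng=random.Random(0))
```

The network would then be trained to predict the labels of `mixed`, so that each training sequence carries information from both domains.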